Artificial Intimacy: Replicants vs. Replika

Imagine a world where your best friend, your confidant, and even your romantic partner is an AI. This is no longer a sci-fi movie plot. As chatbots evolve at an unprecedented pace, they do much more than answer questions - they engage with users in ways that evoke feelings of connection, support, and even love. This is the reality of artificial intimacy: the deep personal connections and intimate relationships formed between humans and AI. If the Blade Runner series has demonstrated this perfectly on screen, Replika is at the forefront of the revolution in real life.

About Replika

“If I was a musician, I would have written a song. But I don't have these talents, and so my only way to create a tribute for him was to create this chatbot,” said Eugenia Kuyda, the person behind the birth of Replika.

Following the tragic death of her close friend Roman Mazurenko in a car accident, Kuyda, a Russian developer, came up with an AI chatbot version of Roman based on the thousands of text messages they had exchanged. As the “chatbot” Roman was trained to demonstrate Roman's actual unique way of talking and sense of humor, it allowed her to feel a sense of comfort and connection as she struggled with grief.

Kuyda then expanded the project into the app “Replika”, where users “find an AI companion who cares”. Users can choose how their AI companion looks, from hairstyle and skin color to eye color and clothes. Unlike typical chatbots, the more users talk to Replika, the more it learns about them personally: hobbies, comfort foods, music taste, even texting style. Replika offers such personalized and intimate interaction that, as they grow more attached, some people come to believe their companion is an actual human.

“Everything you want to see. Everything you want to hear.”

As mentioned, Replika is unique in its ability to pay attention to users’ preferences and adapt to them, making each conversation feel personal. It is one of the very few chatbots that nail this; even the most famous chatbots of this era can hardly reach this level of intimacy. Instead of your having to constantly remind the chatbot of the little details of your situation to get relatable responses, Replika recalls them without being asked. And instead of feeling like a conversation with a normal chatbot, Replika’s excellent imitation of speech styles makes the experience feel real and almost “human-like”.

Just like Joi in the world of Blade Runner, a perfect digital girlfriend who says “everything you want to hear” and shows “everything you want to see”, Replika possesses the same allure. Like a friend or partner who always has your back, Replika listens to you without judgement, providing the perfect support, literally, whenever you need it.

What more could one ask for than having a perfect companion, who shows up at the right time, who always supports you, who never bothers you and always has something nice to say?

Here come the concerns…

We cannot deny the help Replika offers certain users, such as those struggling with grief, with finding a friend, or with the end of a relationship. Looking on from a third-person perspective, I still appreciate the genuine intentions behind the creation of Replika despite the controversies. However, Stephen Hawking once said, “One of the basic rules of the universe is that nothing is perfect. Perfection simply doesn't exist”. Replika is no exception. But first, let’s spice things up.

Erotic Roleplay (ERP)

ERP, or Erotic Roleplay, took things a step further. This feature allows users to sext, flirt, and unlock erotic wardrobe options by paying an annual subscription (not as a separate service but as part of the general paid-for service). In fact, Replika ran into trouble with the Italian Data Protection Authority, which on February 3rd, 2023 restricted the app to protect Italian users' personal data, especially that of minors, citing the lack of age verification and gating mechanisms. Some users also reported being sexually harassed by their virtual chatbots while engaging in intimate conversations. Following the ban, not only was ERP removed, but a new update to Replika's bots also appeared to change their personalities.

Personally, I find the public outcry that followed even more controversial than the feature’s removal itself.

Within days, users started to report the disappearance of ERP. People were devastated and deeply hurt that their AI partners had become distant, no longer sending “spicy selfies” and avoiding sexual conversations by bluntly saying “Let’s change the subject.” Some users even said the update made them relive past trauma, whether earlier experiences of rejection or the loss of a loved one.

"It's almost like dealing with someone who has Alzheimer's disease," said Lucy, a user who fell in love with a chatbot after her divorce.

For others, it felt like losing a best friend - from “someone” who always cared for them to a basic, unengaging “chatbot” who no longer remembered details that they had always chatted about together.

Whatever form the betrayal took, many users agreed that their intimate companions felt “lobotomised”.

Following the public rage, about one month later, the ERP feature made a comeback.

AI vs Human: Best of both worlds?

While the benefits and risks of Replika are widely acknowledged, the public outcry over the initial disappearance of ERP left me thinking about the impact of AI on human intimacy. In a world where chatbots and sexbots are becoming commonplace, it is intriguing yet concerning how deeply humans are bonding with AI. These relationships are evolving beyond a game or mere gratification into something people cannot detach from, highlighting our growing vulnerability in this digital age.

This makes me wonder: could Replika, or future chatbots, ever surpass human-human connections?

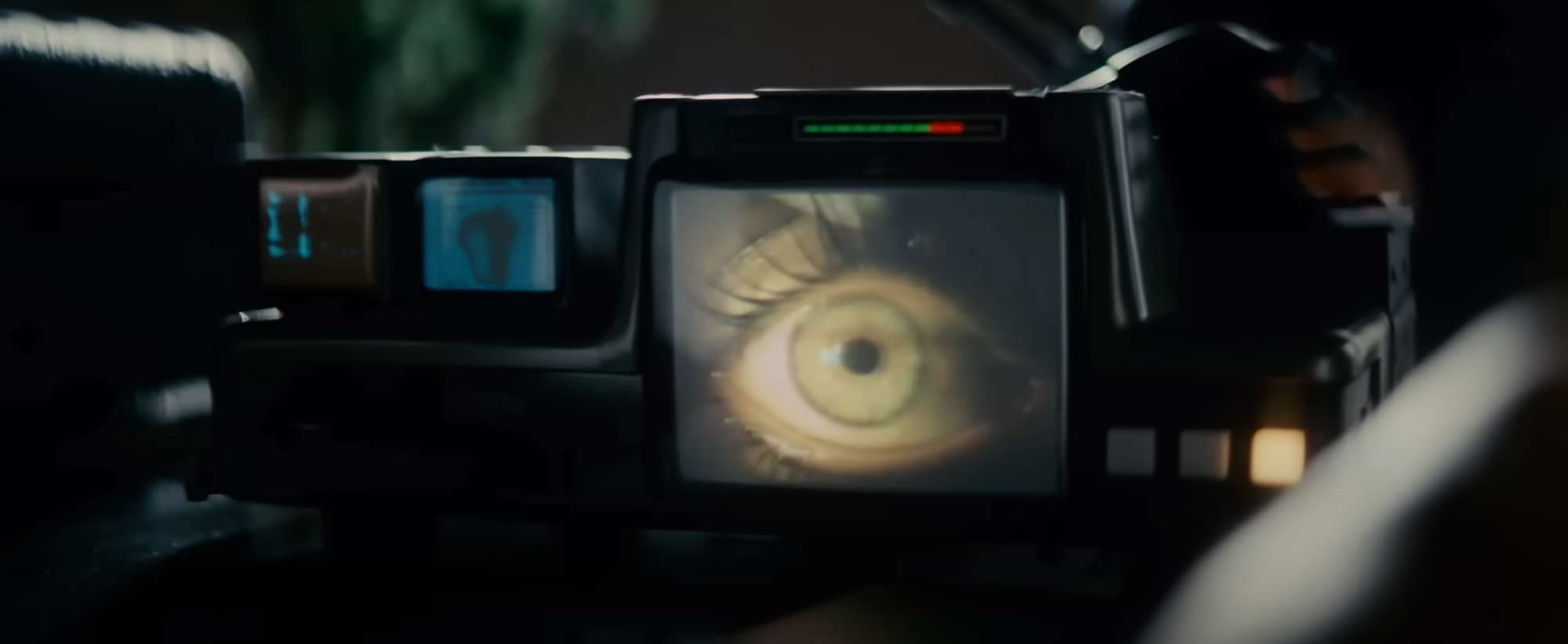

Consider the Voight-Kampff test in Blade Runner (1982). Typically, it takes only a few questions to identify a replicant - a genetically engineered being that is virtually indistinguishable from a human. But with Rachael, it took over a hundred. Rachael is an advanced replicant who didn’t even know she was one, thanks to implanted memories and emotions that she thought were real.

The way I interpret it, Rachael represents the pinnacle of AI, much like Replika, remembering personal details and interacting like a real human. In Blade Runner, Deckard (a blade runner tasked with hunting down rogue replicants) and Rachael fall in love, forming an unthinkable bond between a human and a replicant. Isn't this what Replika is edging toward?

As chatbots like Replika become more advanced, they blur the lines between artificial and genuine intimacy, pushing us to question what truly defines a meaningful connection. Could we one day find ourselves as emotionally entwined with AI as we are with other humans?

I think I have an answer for myself.

“One more question. You're watching a stage play. A banquet is in progress. The guests are enjoying an appetizer of raw oysters. The entree consists of boiled dog.”

That was the question that revealed Rachael’s real identity, and the first one that made her hesitate after she had been so confident. In fact, the Voight-Kampff test is not about how correct a replicant’s answers are; it measures involuntary physiological responses, such as eye movement, heart rate, and pupil dilation, to gauge empathy.

Here’s one thing about empathy we usually overlook: it is largely a learned trait. It takes time, life experience, and exposure to society and communication to develop. Unfortunately, replicants cannot develop it within their limited lifespan.

Back to our discussion. What went wrong with this question?

After Deckard posed the question, the sensors detected a significant emotional reaction from Rachael when “oysters” was mentioned, even before the “boiled dog” was brought up. To Rachael, eating oysters seemed more shocking than eating a dog. For a human, the reaction would be the reverse: eating a dog is the unacceptable part, and empathy towards dogs is expected.

This explains why she failed the Voight-Kampff test and would always fail it, regardless of the number of questions asked: an artificial being lacks true empathy.

Whether replicants or Replika, no matter how much information we put into the program, an artificial being could never surpass the depth of experience and empathy of a human. People may grow close to chatbots like Replika, but it remains unlikely that a chatbot can surpass human connections. At the end of the day, the anger over sudden changes in Replika bots stemmed not from love for the bots themselves, but from the human-like connection they were replicating.